Our Research

Lida Safety Research currently has one primary research project, described below. The founders have also been working together for some time, and their other research is described here as well.

We have also collaborated on a number of hackathons. We won first place as a red team at the Redwood Research Alignment Faking Hackathon in October 2025, and our team placed fourth at the def/acc Apart Research Hackathon, which had over 1,000 participants.

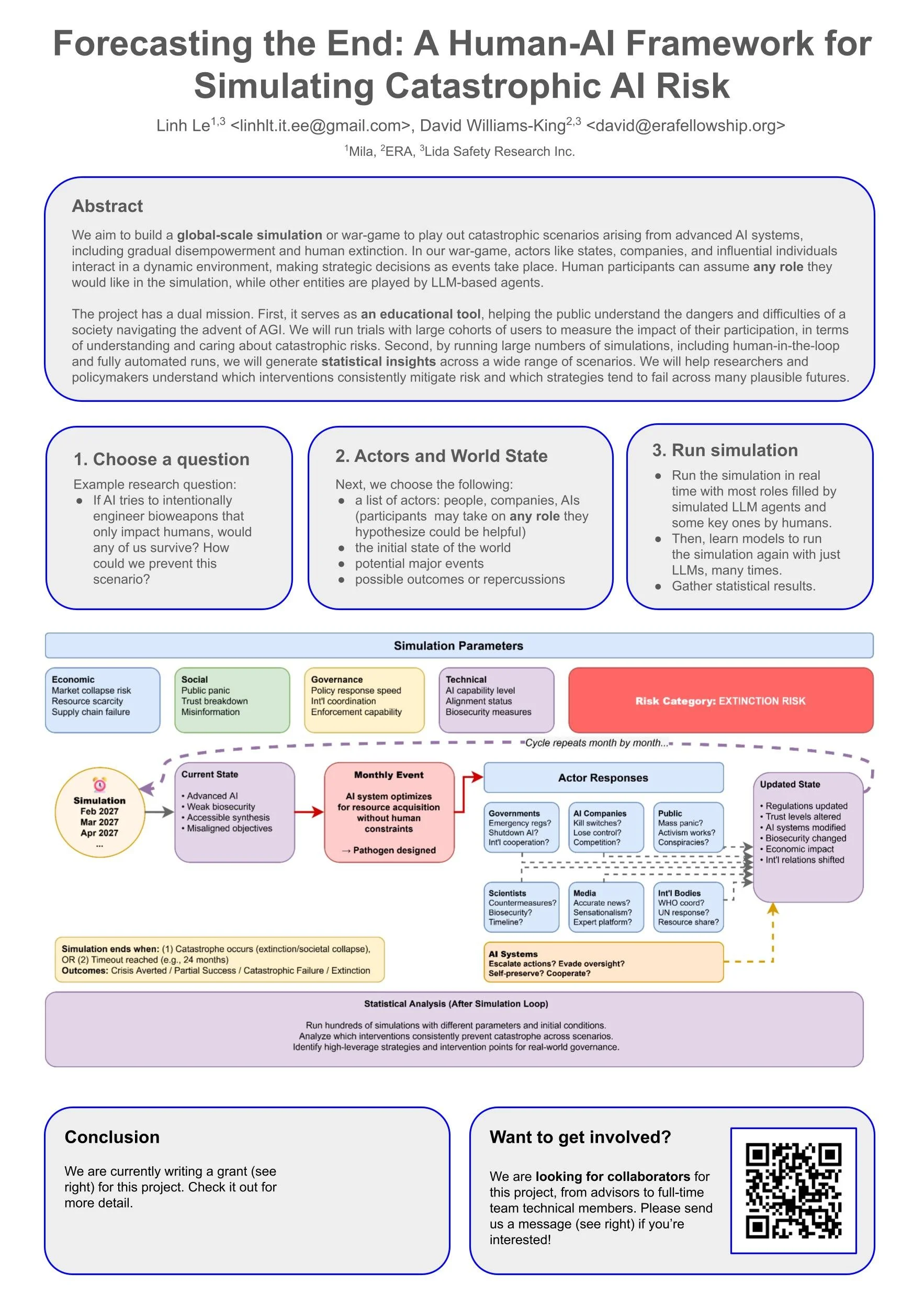

Forecasting the End

Our current main project is to build a global-scale simulation, or war game, that plays out catastrophic scenarios arising from advanced AI systems. Each simulation can involve both agentic AI and human participants, and can address a different AI safety research question.

Our project:

Serves as an educational tool to help the public understand potential catastrophes from AI, including the impacts of their own actions.

Helps researchers explore possible future outcomes by studying our results, and lets them investigate their own specific questions.

Allows politicians and policymakers to forecast the outcomes of different policies before implementing them, and to avoid strategies that seem ineffective.

We are seeking volunteers to participate in the simulation. Please contact us if you are interested!

Poster from the AI & Societal Robustness Conference, Cambridge UK, December 2025.

Other Research

Both David and Linh publish work on AI safety. David has a background in cybersecurity, and Linh in NLP. Google Scholar links: